A product leader at Google recently posted on LinkedIn claiming that their LLM, Bard, would improve your productivity by translating images to LaTeX.1 I used LaTeX all the time in my research, so I was in an excellent position to evaluate this claim. To put it mildly, I disagreed with that assessment.

At the same time, I’ve been looking for an excuse to try Claude 2, the latest LLM from Anthropic AI, which is a direct competitor to OpenAI.

I fed Claude 2 and Bard the same set of equations in image form, and below I discuss how good I thought their responses were. In short, I thought Claude 2 increased my productivity, whereas Bard decreased it. Both the Claude 2 and Bard chatbots are free to use; in contrast, ChatGPT Plus costs $20/month.2

Setup

I regenerated the image of equations used in the original post.3 See the appendix for my LaTeX script and the LLMs' responses.

Then I gave Bard and Claude the same three prompts:

Translate this image to LaTeX

What packages do I need to use, if any, to produce this output?

I would like P to be in sans-serif font. Additionally, I would like B and v to be bolded.

I attached the image of the equations to the first prompt, which is identical to the prompt from the original post.

In my initial response, I pointed out that the original prompt does not get an LLM to tell you what packages or libraries you need to import. The purpose of the second prompt is to see if it can do that.

The final prompt addresses a subtlety. Capital P in the second equation from the top is in sans-serif font, whereas the rest of the equations are in the standard computer modern font. (Admittedly, this is undesirable. All of the Ps should be in the same font. However, this is how the image from the original post was.)
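For readers less familiar with LaTeX math fonts, here is a minimal sketch of the distinction the third prompt asks about. It is an illustration I wrote for this point, not output from either LLM. It relies only on the standard \mathsf and \mathbf math alphabets, which are available without loading any extra package.

```latex
\documentclass{article}

\begin{document}

% Left to right: default italic P, sans-serif P, default italic v, bold v.
\[
  P^\star \qquad \mathsf{P}^\star \qquad v \qquad \mathbf{v}
\]

\end{document}
```

Compiling this shows the default and sans-serif versions of P side by side, which makes the font mismatch in the original image easier to spot.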

Evaluation

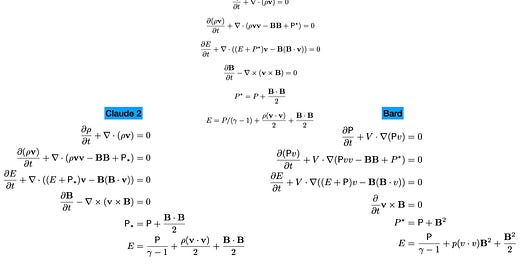

To start the evaluation, I used the code produced by Bard and Claude to generate equations. The figure above compares the equations generated with Claude and Bard to the “true value,” the image of equations I generated and fed into the LLMs with my first prompt. I used the code from the third response from the LLMs to generate their equation images.

Let’s start with what I liked from both LLMs. It makes sense to align the arrays of equations. (The equals signs in the LLMs' output line up vertically.) IMO, the original set of equations should have done this. I also think it’s acceptable that both LLMs used the \frac command for the leftmost term on the right-hand side of the bottommost equation instead of using “/” to represent division. Lastly, I was happy that both LLMs could identify which packages needed to be used to make their code run. The initial prompt from the original post did not produce this response, whereas my second prompt did, highlighting the importance of prompt engineering in obtaining a functional response.
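To make the \frac point concrete, here is a small sketch of the bottommost equation written both ways. The first form matches the script in the appendix. The second is my own rendering of the style described above, not the LLMs' verbatim output.

```latex
\documentclass{article}

\begin{document}

% Inline division, as in the original image.
\[
  E = P / (\gamma - 1) + \frac{\rho (\mathbf{v} \cdot \mathbf{v})}{2} + \frac{\mathbf{B} \cdot \mathbf{B}}{2}
\]

% The same equation with the first term written using \frac.
\[
  E = \frac{P}{\gamma - 1} + \frac{\rho (\mathbf{v} \cdot \mathbf{v})}{2} + \frac{\mathbf{B} \cdot \mathbf{B}}{2}
\]

\end{document}
```

The two forms are mathematically identical; the choice only affects how the term is typeset.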

Moving on to Bard individually, there is a mistake in every single equation. This was also the case in the original copy the Google product leader advertised. Five of the six equations are qualitatively wrong, while the fifth equation from the top only misses a factor of two, and its notation “B-squared” is ambiguous. With the amount of work required to make all the needed corrections, you might as well write the code from scratch yourself.

In contrast, Claude 2 only makes one small mistake. Specifically, it writes the star associated with P as a subscript rather than a superscript. However, it is consistent about making this mistake such that the equations could be used as is, or a single find-and-replace-all would fix it. This is a noticeable improvement over the output from Bard! Claude is also consistent about writing P in sans-serif font, which is the natural interpretation of the instruction from my third prompt. On the flip side, not only is Bard inconsistent about the font and capitalization of P, it again confuses P and \rho, which are different variables. (Bard also wasn’t consistent about bolding v.)

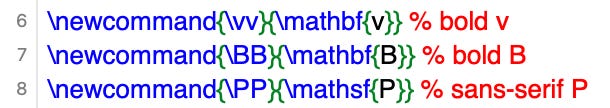

However, what I liked most about my experience here with Claude didn’t appear in the final output. Instead, I was most impressed with the code it wrote. In particular, it created commands for managing the font and style of variables without specifically being prompted to do so; see the figure above.

I usually only do this when writing long papers. However, retrospectively, it probably would have been faster for me to use Claude’s approach in this short example. Additionally, this approach is less prone to making mistakes and makes it easier to change styles. Granted, it’s only one example, but I was impressed by this and would certainly consider using Claude 2 again with an eye toward increasing my productivity.
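For readers who have not used this pattern, here is a minimal sketch of what style-managing commands can look like. The macro names \vect, \tens, and \Ptot are hypothetical ones I chose for illustration; they are not the names Claude used, and this is not a copy of its code.

```latex
\documentclass{article}

% Hypothetical style macros (names are illustrative, not Claude's).
\newcommand{\vect}[1]{\mathbf{#1}}   % vectors in bold
\newcommand{\tens}[1]{\mathsf{#1}}   % sans-serif symbols
\newcommand{\Ptot}{\tens{P}^\star}   % P-star, defined in exactly one place

\begin{document}

\begin{equation}
\frac{\partial (\rho \vect{v})}{\partial t} + \nabla \cdot (\rho \vect{v} \vect{v} - \vect{B} \vect{B} + \Ptot) = 0
\end{equation}

\end{document}
```

With this setup, changing how every vector is styled, or moving the star on P between subscript and superscript, means editing a single definition in the preamble rather than every equation.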

Update

The first version of this post incorrectly stated that Claude 2 is multimodal. It is not. Thanks to Emmanuel for pointing this out.

This raises the interesting question of what’s happening under the hood when Claude is asked to “translate an image to LaTeX.” This topic could be another whole post, but I’ll try to summarize what I found.

Claude explicitly accepts .pdf, .txt, and .csv, among other file formats. I tried giving it a .png, but it did not take that. At Emmanuel's suggestion, I experimented with Claude to see how it parses text in a PDF file. The key limitation of Claude on this task is that it has to be able to parse the PDF file. Although Claude provided me with detailed responses, I’m not sure I ultimately got to the bottom of how it parses equations within the text of a PDF. In any case, generating code from a PDF with text equations is likely more straightforward than doing so from a proper image. This may very well explain why Claude outperformed Bard in the first step of the comparison. That being said, this does not take away from Claude’s impressive performance on my subsequent prompts. Also, most equations generated using LaTeX reside in PDFs, so it still fits the stated use case of “translate this image to LaTeX.” That said, a savvy arXiv user interested in the LaTeX used to produce an image would simply download the source file, obviating the need for the LLM use case in the original post altogether.

Appendix

LaTeX script

Here is the script I used to generate the equations in this post. The intention here was to reproduce the original input. If I were doing this from the start, I would have used the align environment once instead of using the equation environment six times. I also would have been consistent about the font for P.
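As a rough sketch of that alternative, the six equations could be written in a single align environment along the following lines. This assumes the amsmath package, which provides align, and it assumes the default italic P is used throughout; sans-serif everywhere would be an equally consistent choice.

```latex
\documentclass{article}
\usepackage{amsmath} % provides the align environment

\begin{document}

% The & before each = marks the alignment point; \\ ends a row.
\begin{align}
  \frac{\partial \rho}{\partial t} + \nabla \cdot (\rho \mathbf{v}) &= 0 \\
  \frac{\partial (\rho \mathbf{v})}{\partial t} + \nabla \cdot (\rho \mathbf{v} \mathbf{v} - \mathbf{B} \mathbf{B} + P^\star) &= 0 \\
  \frac{\partial E}{\partial t} + \nabla \cdot ((E + P^\star) \mathbf{v} - \mathbf{B} (\mathbf{B} \cdot \mathbf{v})) &= 0 \\
  \frac{\partial \mathbf{B}}{\partial t} - \nabla \times (\mathbf{v} \times \mathbf{B}) &= 0 \\
  P^\star &= P + \frac{\mathbf{B} \cdot \mathbf{B}}{2} \\
  E &= P / (\gamma - 1) + \frac{\rho (\mathbf{v} \cdot \mathbf{v})}{2} + \frac{\mathbf{B} \cdot \mathbf{B}}{2}
\end{align}

\end{document}
```

Each row still receives its own equation number, as with six separate equation environments, but the equals signs now line up.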

\documentclass{article}
\usepackage{amsfonts}

\begin{document}

\begin{equation}
\frac{\partial \rho}{\partial t} + \nabla \cdot (\rho \mathbf{v}) = 0
\end{equation}

\begin{equation}
\frac{\partial (\rho \mathbf{v})}{\partial t} + \nabla \cdot (\rho \mathbf{v} \mathbf{v} - \mathbf{B} \mathbf{B} + \mathsf{P}^\star) = 0
\end{equation}

\begin{equation}
\frac{\partial E}{\partial t} + \nabla \cdot ((E + P^\star) \mathbf{v} - \mathbf{B} (\mathbf{B} \cdot \mathbf{v})) = 0
\end{equation}

\begin{equation}
\frac{\partial \mathbf{B}}{\partial t} - \nabla \times (\mathbf{v} \times \mathbf{B}) = 0
\end{equation}

\begin{equation}
P^\star = P + \frac{\mathbf{B} \cdot \mathbf{B}}{2}
\end{equation}

\begin{equation}
E = P / (\gamma - 1) + \frac{\rho (\mathbf{v} \cdot \mathbf{v})}{2} + \frac{\mathbf{B} \cdot \mathbf{B}}{2}
\end{equation}

\end{document}

Bard output

From the 1st prompt:

From the 2nd prompt:

From the 3rd prompt:

Claude 2 output

From the 1st prompt:

From the 2nd prompt:

From the 3rd prompt:

The astute reader will notice the input equations are not self-consistent.